Nvidia researchers have developed a compact neural network that outperforms specialized systems at controlling humanoid robots while using far fewer resources. The system accepts a range of input methods, including virtual reality (VR) headsets and motion capture.

The new system, called HOVER, handles complex robot movements with just 1.5 million parameters. Large language models, by comparison, typically have hundreds of billions.

The research team trained HOVER in Nvidia's Isaac simulation environment, which runs robot motion 10,000 times faster than real time. According to Nvidia researcher Jim Fan, this means a year of training in simulation takes only about 50 minutes of real computation on a single graphics processing unit (GPU).
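The 50-minute figure follows directly from the 10,000-fold speedup. The short Python sketch below reproduces the arithmetic. The function name and the 365-day year are assumptions made for illustration and are not taken from Nvidia's code.

```python
# Back-of-the-envelope check of the simulation speedup quoted above.
# Assumes a 365-day year; the helper name is hypothetical.
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes


def wall_clock_minutes(simulated_years: float, speedup: float) -> float:
    """Real minutes needed to simulate `simulated_years` at the given speedup."""
    return simulated_years * MINUTES_PER_YEAR / speedup


minutes = wall_clock_minutes(simulated_years=1, speedup=10_000)
print(f"{minutes:.1f} minutes")  # 52.6 minutes
```

At exactly 10,000 times real time, one simulated year comes to roughly 53 minutes, which matches the rounded figure of about 50 minutes.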

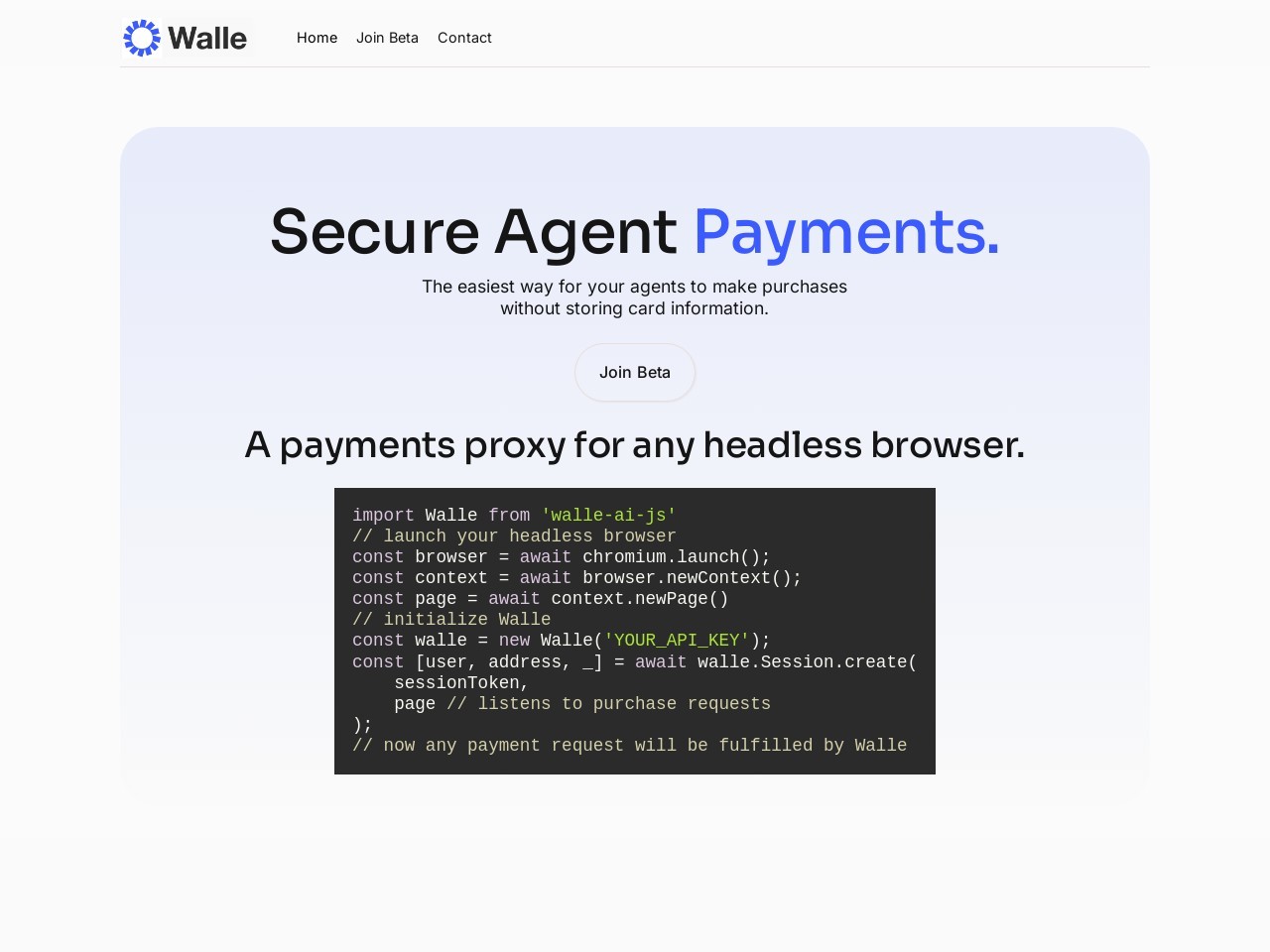

The HOVER system is both compact and versatile. Fan noted that HOVER can achieve zero-shot transfer from simulation environments to physical robots without requiring fine-tuning. The system supports inputs from multiple sources, including head and hand tracking from extended reality (XR) devices like the Apple Vision Pro, full-body positioning from motion capture or RGB cameras, joint angles from exoskeletons, and standard joystick controls.

With every control method, HOVER outperforms systems designed specifically for that single input type. The paper's lead author, Tairan He, suggests that this may be because the system has a broad understanding of physical concepts such as balance and precise limb control, which it applies across all control modes.

Built on the open-source H2O and OmniH2O projects, HOVER is compatible with any humanoid robot that can be run in the Isaac simulator. Nvidia has released examples and code on GitHub.