Imagine a surveillance camera that does more than record footage: it understands events as they happen and distinguishes everyday activities from potential threats. Researchers at the University of Virginia's School of Engineering and Applied Science are moving toward that goal with their latest work, the Semantic and Motion-Aware Spatiotemporal Transformer Network (SMAST). SMAST is an AI-driven video analyzer that detects human actions in video with a level of precision its developers describe as unprecedented.

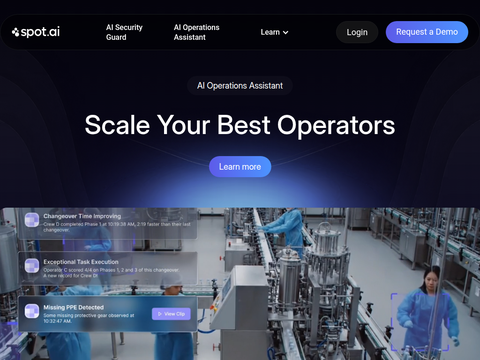

SMAST has potential applications across many sectors: enhancing surveillance systems and public safety, enabling more sophisticated motion tracking in healthcare, and improving how autonomous vehicles navigate complex environments.

Scott T. Acton, professor and department head of Electrical and Computer Engineering and the lead researcher on the project, stated, "This AI technology opens the door for real-time action detection in some of the most challenging environments. It represents a breakthrough that can help prevent accidents, improve diagnostics, and even save lives."

So how does SMAST achieve this? It relies on two key components working together. The first is a multi-feature selective attention model, which helps the AI focus on the most important parts of a scene, such as people or objects, while ignoring unnecessary details. This improves SMAST's accuracy on complex behaviors: it can recognize that someone is throwing a ball, for example, rather than merely registering that an arm is moving.
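To make the idea of selective attention concrete, here is a minimal sketch of generic scaled dot-product attention over a handful of scene regions. It is an illustration only, not SMAST's actual multi-feature model: the function names, the three toy regions (person, ball, background), and all feature values are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Generic scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # Weighted sum of the region values: relevant regions dominate the result.
    pooled = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, pooled

# Hypothetical toy features for three regions: person, ball, background.
region_keys = [[1.0, 0.0], [0.8, 0.2], [0.0, 1.0]]
region_values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
query = [4.0, 0.0]  # a query that "looks for" person-like features

weights, pooled = attend(query, region_keys, region_values)
```

In this toy setup the person and ball regions receive most of the weight and the background is largely ignored, which is the behavior the article describes in prose.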

The second component is a motion-aware 2D positional encoding algorithm, which helps the AI track how objects move over time. It lets SMAST keep track of actions across the video and understand how they relate to one another, which is what allows it to identify complex motions. Combining the two components lets SMAST recognize intricate human behaviors in real time, making it more effective in high-stakes settings such as surveillance, medical diagnostics, and autonomous driving.
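As a rough illustration of what a motion-aware 2D positional encoding could look like, the sketch below combines a standard sinusoidal encoding of an object's current (x, y) position with the same encoding applied to its displacement since the previous frame. This construction is an assumption made for illustration and is not the algorithm from the SMAST paper; `sinusoid`, `encode_2d`, and `motion_aware_encode` are hypothetical names.

```python
import math

def sinusoid(value, dim):
    """Standard sinusoidal encoding of one scalar into `dim` numbers (dim even)."""
    out = []
    for i in range(dim // 2):
        freq = 1.0 / (10000 ** (2 * i / dim))
        out.append(math.sin(value * freq))
        out.append(math.cos(value * freq))
    return out

def encode_2d(x, y, dim=8):
    """Encode a 2D position: half the channels for x, half for y (dim divisible by 4)."""
    half = dim // 2
    return sinusoid(x, half) + sinusoid(y, half)

def motion_aware_encode(prev_xy, curr_xy, dim=8):
    """Illustrative only: position code followed by a code for frame-to-frame motion."""
    dx = curr_xy[0] - prev_xy[0]
    dy = curr_xy[1] - prev_xy[1]
    position_part = encode_2d(curr_xy[0], curr_xy[1], dim)
    motion_part = encode_2d(dx, dy, dim)
    return position_part + motion_part

# Two hypothetical tracks that end at the same point but moved differently.
still = motion_aware_encode((3, 4), (3, 4))
moving = motion_aware_encode((0, 0), (3, 4))
```

Here `still` and `moving` share the same position channels but differ in the motion channels, so a downstream model can tell a stationary object from one that just arrived at the same location.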

SMAST's design changes how machines detect and interpret human actions. Current systems struggle to grasp the context of events when processing chaotic, unedited, continuous video. SMAST, by contrast, captures the dynamic relationships between people and objects with high precision, using components that learn and adapt from data.

This technological leap means that AI systems can now recognize complex actions such as a runner crossing the street, a doctor performing precise surgery, or security threats in crowded spaces. SMAST has surpassed top-tier solutions across multiple critical academic benchmarks, setting new standards for accuracy and efficiency.

Matthew Korban, a postdoctoral researcher involved in the project, commented, "The societal impact could be tremendous. We are excited to see how this AI technology can transform industries, making video-based systems smarter and capable of real-time understanding."

The research was published in IEEE Transactions on Pattern Analysis and Machine Intelligence in the paper "A Semantic and Motion-Aware Spatiotemporal Transformer Network for Action Detection," by Matthew Korban, Peter Youngs, and Scott T. Acton of the University of Virginia.